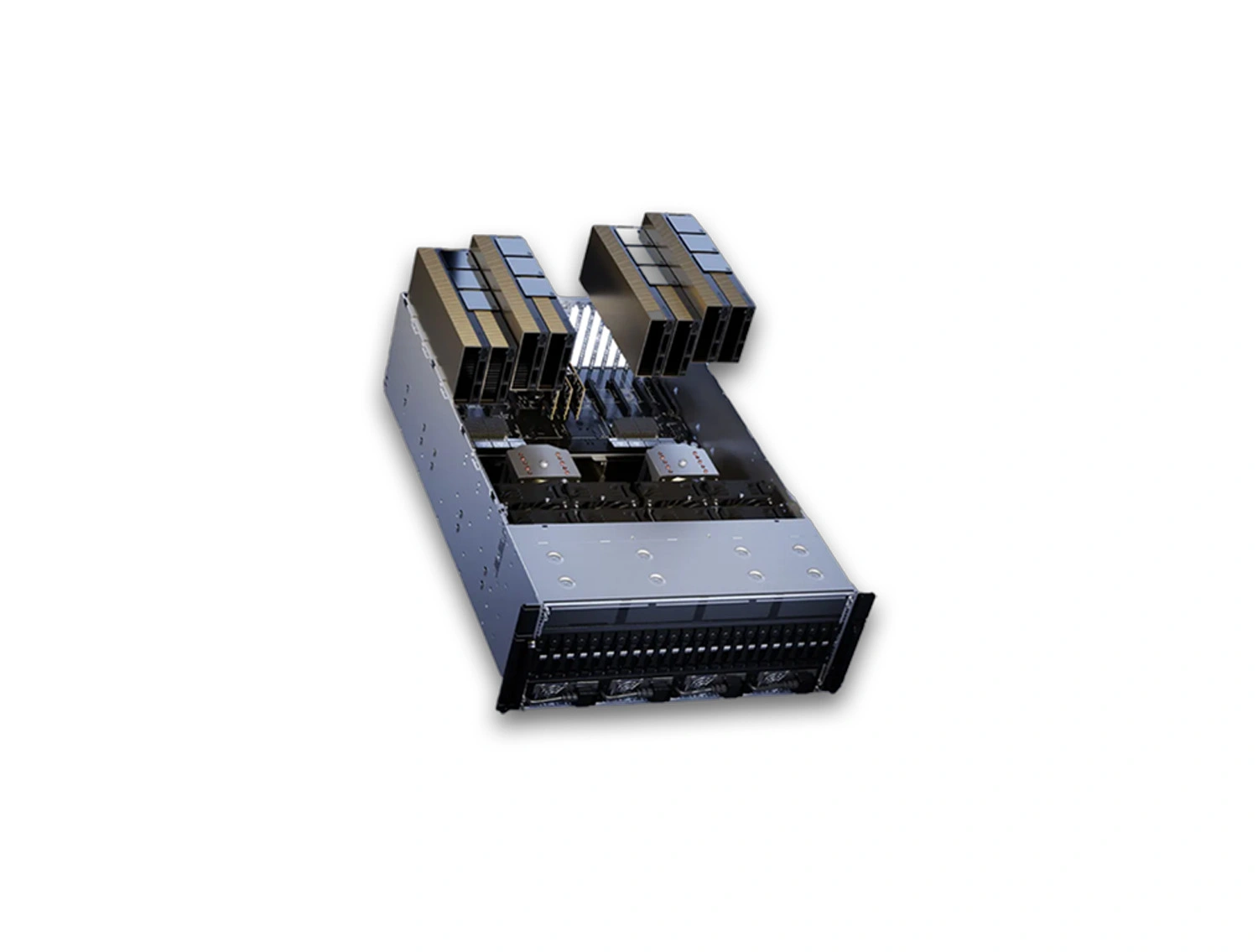

NVIDIA H200 Tensor Core GPU (H200 NVL)

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

Powering the Future of Generative AI and HPC

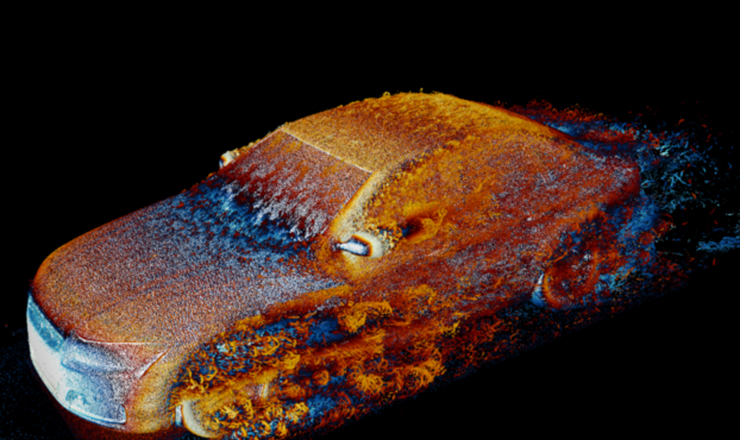

The NVIDIA H200 Tensor Core GPU is designed for both generative AI and HPC workloads. Its performance and memory capabilities accelerate everything from large language models (LLMs) to scientific simulations.

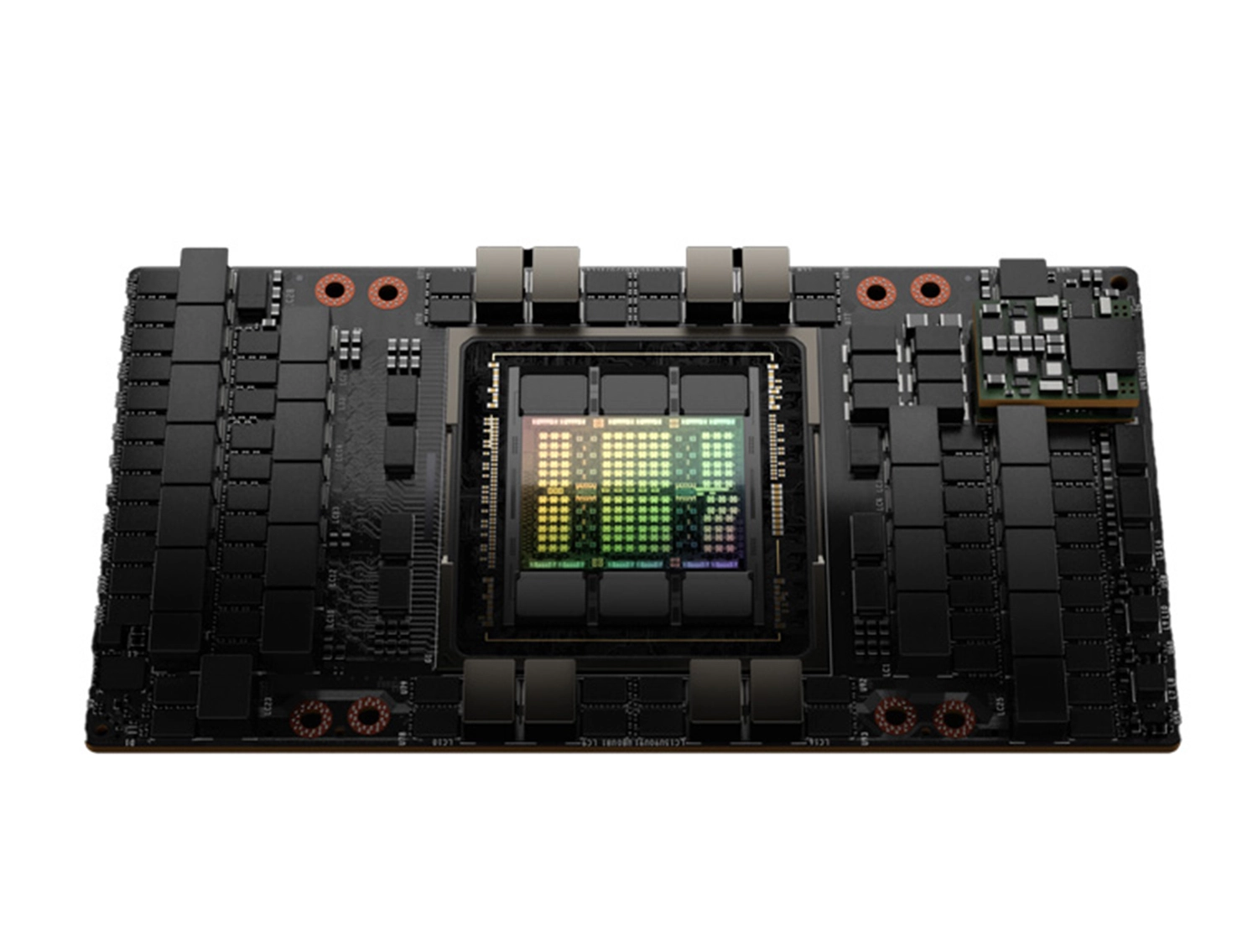

Higher Performance with Larger, Faster Memory

The NVIDIA H200 is built on the NVIDIA Hopper™ architecture and is the first GPU to feature 141GB of HBM3e memory at 4.8 terabytes per second (TB/s). That is nearly double the memory capacity and 1.4X the memory bandwidth of the H100. This boost enables higher performance for both generative AI and large-scale HPC workloads while improving energy efficiency and lowering the total cost of ownership.
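The capacity and bandwidth ratios quoted above can be checked with simple arithmetic. This sketch assumes the H100 baseline is the 80GB H100 SXM with 3.35 TB/s of HBM3 bandwidth. Those two figures are not stated on this page, and other H100 variants (PCIe, NVL) would give different ratios.

```python
# Assumed baseline: H100 SXM with 80 GB HBM3 at 3.35 TB/s (not stated on this
# page; other H100 variants differ). H200 figures come from the listing above.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBS = 3.35


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`, rounded to 2 decimals."""
    return round(new / old, 2)


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)

print(f"capacity:  {capacity_ratio}x")   # capacity:  1.76x
print(f"bandwidth: {bandwidth_ratio}x")  # bandwidth: 1.43x
```

Under this baseline, 1.76x matches "nearly double" and 1.43x matches "1.4X".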

Unlock Insights with High-Performance LLM Inference

The H200 delivers up to twice the inference speed of the H100 when running large language models (LLMs) such as Llama 2. This makes it well suited to AI inference workloads deployed at scale.
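Token-by-token LLM decoding at small batch sizes is often limited by how quickly model weights stream from memory, so memory bandwidth sets a rough floor on per-token latency. This minimal sketch makes several assumptions: a hypothetical 70B-parameter model stored at 1 byte per parameter (FP8), a batch size of 1, and full peak bandwidth. It also ignores KV-cache reads and compute time. The H100 SXM bandwidth of 3.35 TB/s is likewise an assumed baseline, and `decode_latency_floor_ms` is a hypothetical helper. This is an illustration, not a benchmark.

```python
# Bandwidth-only lower bound on per-token decode latency at batch size 1.
# Assumptions (illustrative only): every weight byte is read once per token,
# peak bandwidth is achieved, and KV-cache traffic and compute are ignored.

def decode_latency_floor_ms(num_params: float, bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Minimum milliseconds per token if decoding is purely memory-bound."""
    weight_bytes = num_params * bytes_per_param
    seconds = weight_bytes / (bandwidth_tb_s * 1e12)  # TB/s -> bytes/s
    return seconds * 1e3


# Hypothetical model: 70B parameters in FP8 (1 byte per parameter).
params = 70e9
gpus = [("H200 (4.8 TB/s)", 4.8), ("H100 SXM, assumed (3.35 TB/s)", 3.35)]
for name, bw in gpus:
    ms = decode_latency_floor_ms(params, 1.0, bw)
    print(f"{name}: >= {ms:.1f} ms/token, <= {1e3 / ms:.0f} tokens/s")
```

Under these assumptions, bandwidth alone accounts for roughly a 1.43x difference (4.8 / 3.35). The larger 141GB capacity leaves room for bigger batches and KV caches, but that effect is not modeled here. For that reason, the sketch does not attempt to reproduce the "up to 2X" figure.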

| Attribute | Details |
|---|---|
| Condition | Brand New |
| MFG Number | 900-21010-0040-000 |
| Price | $31,500.00 |
| Financing | No |
| Estimated Time of Delivery | Delivery begins in January. Pre-order now! |
| Product Card Description | 141GB GPU Memory, 989 TFLOPS TF32, 4.8TB/s Bandwidth; Configurable TDP Up to 600W for Extreme Power |
| Order Processing Guidelines | Inquiry First: Please reach out to our team to discuss your requirements before placing an order. |
| Special Price From Date | Jul 31, 2024 |
| Special Price To Date | Aug 30, 2024 |