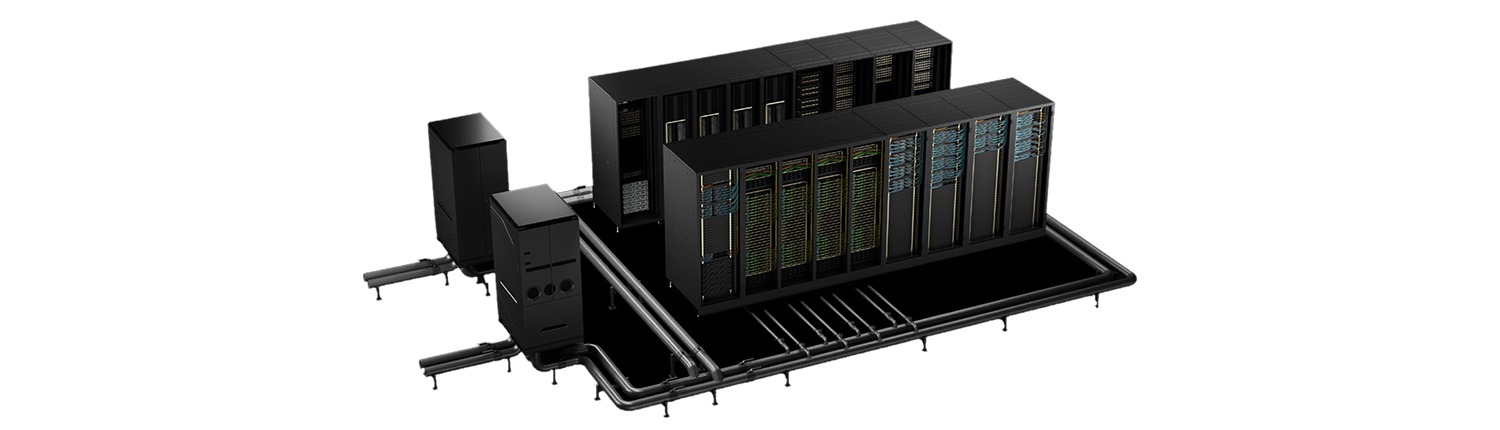

NVIDIA GB200 NVL72 AI Data Center

Rack-scale AI supercomputer with 72 NVIDIA Blackwell GPUs and 36 Grace CPUs, up to 13.4 TB of HBM3e memory, and 130 TB/s of NVLink bandwidth. Built for trillion-parameter LLM inference (up to 30× faster than H100) and large-scale model training.

AI Supercomputer for the Era of General Intelligence

The NVIDIA GB200 NVL72 combines 72 NVIDIA Blackwell GPUs and 36 Grace CPUs in a single, rack-scale, liquid-cooled design. Built to power the next wave of generative AI, LLM inference, and digital twins, it provides up to 1,440 petaFLOPS of FP4 AI performance per rack.

Unifying Compute, Memory, and Networking at Scale

Each GB200 Grace Blackwell Superchip integrates an NVIDIA Grace CPU and two NVIDIA Blackwell GPUs connected via fifth-generation NVLink. With 72 GPUs interconnected through NVLink Switch fabric, GB200 NVL72 acts as a single massive GPU for unparalleled scalability and throughput.
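As a rough illustration of how the rack totals follow from the superchip layout described above, the sketch below multiplies out the per-superchip counts. The figure of 36 superchips per rack is inferred from the 36 Grace CPUs quoted in this listing (one CPU per superchip), and the `Superchip` class and `rack_totals` function are hypothetical names used only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """One GB200 Grace Blackwell Superchip, as described in the listing."""
    grace_cpus: int = 1      # one Grace CPU per superchip
    blackwell_gpus: int = 2  # two Blackwell GPUs per superchip


def rack_totals(superchips: int, chip: Superchip = Superchip()) -> dict:
    """Return the CPU and GPU counts for a rack with the given number of superchips."""
    return {
        "cpus": superchips * chip.grace_cpus,
        "gpus": superchips * chip.blackwell_gpus,
    }


# Assumption: 36 superchips per rack, inferred from the 36 Grace CPUs in the listing.
totals = rack_totals(36)
print(totals)  # {'cpus': 36, 'gpus': 72}
```

The result matches the 72 GPU and 36 CPU counts quoted for the NVL72 rack.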

Liquid-Cooled Rack-Scale Efficiency

Designed for modern data centers, the GB200 NVL72 uses direct liquid cooling for exceptional energy efficiency. It reduces total power usage while maintaining maximum performance density, making it ideal for hyperscale and enterprise AI factories.

Key Features

- Powered by 72 NVIDIA Blackwell GPUs and 36 Grace CPUs

- Up to 1,440 petaFLOPS of FP4 AI performance per rack (720 petaFLOPS FP8)

- Up to 13.4 TB of HBM3e GPU memory per NVL72 rack

- Fifth-generation NVIDIA NVLink with 1.8 TB/s bandwidth per GPU

- 130 TB/s total NVLink switch fabric bandwidth

- Direct liquid cooling for maximum energy efficiency

- Integrated with NVIDIA Quantum-X800 InfiniBand and Spectrum-X Ethernet

- Supports NVIDIA AI Enterprise and NVIDIA Mission Control software
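The headline numbers in this listing can be cross-checked with simple arithmetic. The sketch below is only a sanity check using figures quoted on this page (72 GPUs, 1.8 TB/s NVLink per GPU, 13.4 TB HBM3e, 1,440 PFLOPS FP4, 720 PFLOPS FP8). The per-GPU values it derives are plain averages, not official per-GPU specifications, and the decimal conversion of 1 TB = 1,000 GB is an assumption.

```python
# Figures quoted in this listing.
GPUS = 72
NVLINK_PER_GPU_TBPS = 1.8   # TB/s per GPU
HBM_TOTAL_TB = 13.4         # TB of HBM3e per rack
FP4_PFLOPS = 1440           # per rack
FP8_PFLOPS = 720            # per rack

# Aggregate NVLink bandwidth: per-GPU bandwidth times GPU count.
aggregate_nvlink_tbps = GPUS * NVLINK_PER_GPU_TBPS

# Average memory per GPU (assumption: decimal units, 1 TB = 1000 GB).
hbm_per_gpu_gb = HBM_TOTAL_TB * 1000 / GPUS

# Average compute per GPU at each precision.
fp4_per_gpu_pflops = FP4_PFLOPS / GPUS
fp8_per_gpu_pflops = FP8_PFLOPS / GPUS

print(f"Aggregate NVLink: {aggregate_nvlink_tbps:.1f} TB/s")   # 129.6 TB/s
print(f"HBM3e per GPU:    {hbm_per_gpu_gb:.0f} GB (average)")  # 186 GB
print(f"FP4 per GPU:      {fp4_per_gpu_pflops:.1f} PFLOPS")    # 20.0 PFLOPS
print(f"FP8 per GPU:      {fp8_per_gpu_pflops:.1f} PFLOPS")    # 10.0 PFLOPS
```

The aggregate of 129.6 TB/s rounds to the 130 TB/s quoted for the NVLink switch fabric, which suggests the fabric figure is the sum of the per-GPU NVLink bandwidth.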

| MFG Number | GB200-NVL72 |
|---|---|
| Condition | Brand New |
| Price | $300,000.00 |
| Product Card Description | The NVIDIA GB200 NVL72 is a liquid-cooled, data-center-class AI system delivering unprecedented performance for generative AI, large language models, and HPC workloads. With a 72-GPU NVLink domain, the system acts as a unified accelerator delivering up to 1,440 PFLOPS FP4 and 720 PFLOPS FP8, with up to 13.4 TB of HBM3e memory and 130 TB/s of high-speed interconnect bandwidth. Designed for real-time trillion-parameter inference (30× H100) and large-scale training (4× H100), this rack-scale solution enables hyperscale AI infrastructure with extreme throughput and efficiency. |
| Order Processing Guidelines | Inquiry First: please reach out to our team to discuss your requirements before placing an order. |