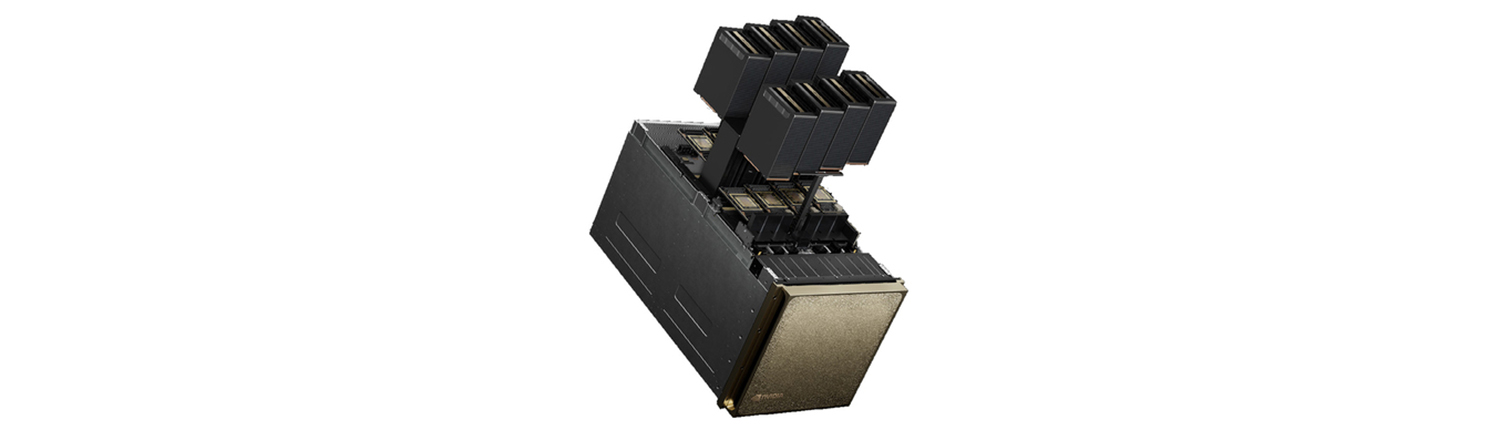

NVIDIA DGX B200 AI Server

The NVIDIA DGX B200 AI Server delivers next-level performance for AI, deep learning, and generative AI. Powered by NVIDIA Blackwell GPUs, it is engineered to accelerate enterprise-scale AI innovation with unmatched speed, efficiency, and scalability.

The Proven Standard for Enterprise AI

Built from the ground up for enterprise AI, the NVIDIA DGX™ platform, featuring NVIDIA DGX SuperPOD™, combines the best of NVIDIA software, infrastructure, and expertise in a modern, unified AI development solution—powering next-generation AI factories with unparalleled performance, scalability, and innovation.

Enterprise Infrastructure for Mission-Critical AI

NVIDIA DGX B200 is a unified AI platform built for training, fine-tuning, and inferencing large generative AI models. Each system features eight NVIDIA Blackwell GPUs with 1,440 GB of total GPU memory, connected with fifth-generation NVIDIA NVLink™. Multiple DGX B200 systems can be connected with NVIDIA Quantum InfiniBand to scale out into NVIDIA DGX BasePOD™ and NVIDIA DGX SuperPOD™ deployments.

Maximize the Value of the NVIDIA DGX Platform

NVIDIA Enterprise Services provide support, education, and infrastructure specialists for your NVIDIA DGX infrastructure. With NVIDIA experts available at every step of your AI journey, Enterprise Services can help you get your projects up and running quickly and successfully.

Unparalleled Performance with Blackwell Architecture

Powered by advances in the NVIDIA Blackwell architecture, DGX B200 delivers 3X the training performance and 15X the inference performance of DGX H100. As the foundation of NVIDIA DGX BasePOD™ and NVIDIA DGX SuperPOD™, DGX B200 delivers leading-edge performance across AI training and inference workloads.

| Item | Details |
|---|---|
| MFG Number | DGXB-G1440+P2EDI36 |
| Condition | Brand New |
| Price | $300,000.00 |
| Description | Next-generation AI server with NVIDIA Blackwell GPUs, built for enterprise-scale AI and generative AI workloads. |
| Order Processing Guidelines | Inquiry First: Please reach out to our team to discuss your requirements before placing an order. |