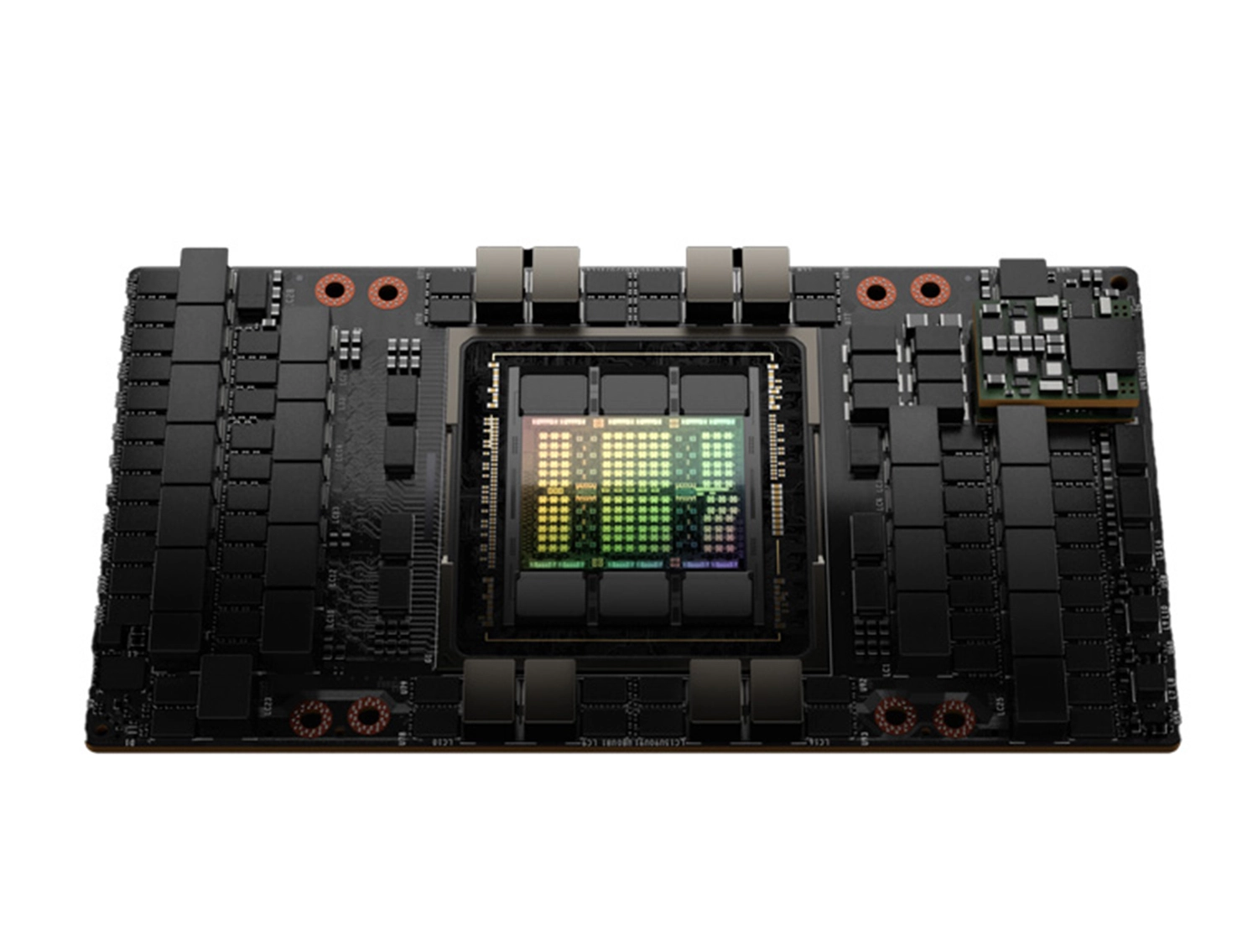

NVIDIA H100 Tensor Core GPU 80GB PCIe

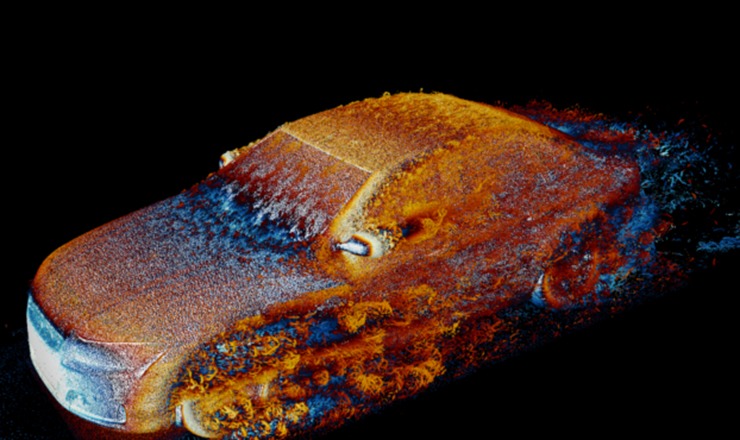

H100 features fourth-generation Tensor Cores and a Transformer Engine with FP8 precision that provides up to 4X faster training over the prior generation for GPT-3 (175B) models. The combination of fourth-generation NVLink, which offers 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect; NDR Quantum-2 InfiniBand networking, which accelerates communication by every GPU across nodes; PCIe Gen5; and NVIDIA Magnum IO™ software delivers efficient scalability from small enterprise systems to massive, unified GPU clusters.
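To put the 900 GB/s NVLink figure in perspective, here is a rough back-of-the-envelope sketch of how long one full copy of a 175B-parameter model's weights would take to cross the link. It assumes FP8 storage (1 byte per parameter) and treats the quoted bandwidth as a sustained peak rate with no protocol overhead, so the result is an idealized lower bound, not a measured number. The function name and the scenario itself are illustrative and not taken from NVIDIA material.

```python
def ideal_transfer_seconds(num_bytes: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time: payload size divided by peak bandwidth.

    Uses decimal gigabytes (1 GB = 1e9 bytes) and ignores latency,
    protocol overhead, and contention, so real transfers take longer.
    """
    return num_bytes / (bandwidth_gb_per_s * 1e9)


NVLINK_GB_PER_S = 900      # fourth-generation NVLink figure quoted above
GPT3_PARAMS = 175e9        # GPT-3 (175B) parameter count quoted above
BYTES_PER_FP8_PARAM = 1    # assumption: weights stored in FP8

weights_bytes = GPT3_PARAMS * BYTES_PER_FP8_PARAM
seconds = ideal_transfer_seconds(weights_bytes, NVLINK_GB_PER_S)
print(f"Idealized time to move FP8 weights once: {seconds:.3f} s")
```

Under these assumptions the copy takes roughly a fifth of a second; actual throughput depends on topology, message sizes, and the collective operations in use.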

NVIDIA H100 Tensor Core GPU Resources

Order-of-Magnitude Leap for Accelerated Computing

The NVIDIA H100 Tensor Core GPU offers breakthrough performance, scalability, and security. Powered by the NVIDIA Hopper™ architecture, H100 delivers industry-leading speed for conversational AI, accelerating large language models (LLMs) by up to 30X over the previous generation.

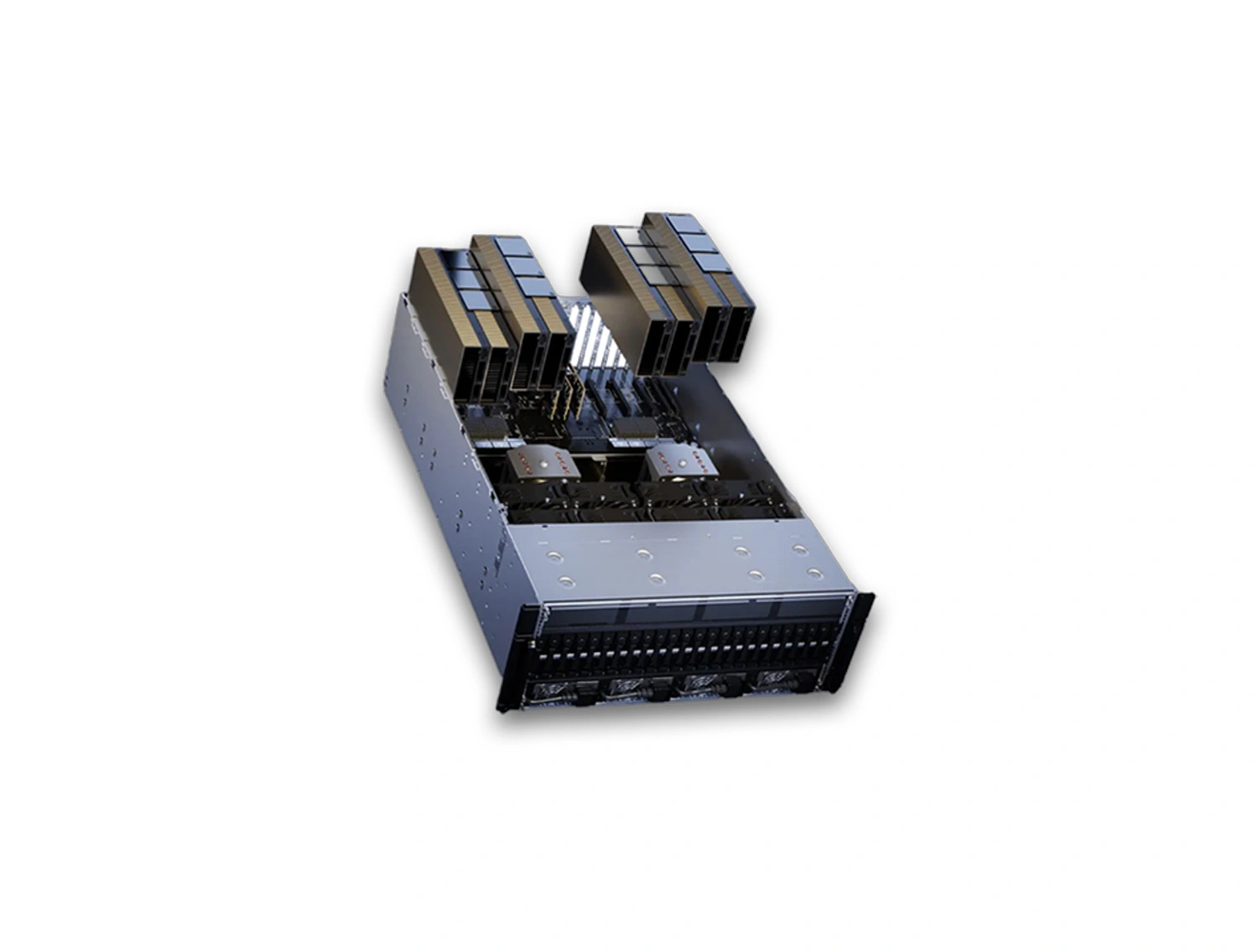

Powering Advanced Workstations and Servers for Professional Applications

The H100 is designed for high-end workstations and servers, delivering up to 4X faster training for GPT-3 (175B) models and tripling the FLOPS for double-precision Tensor Cores. Ideal for both enterprise and exascale data centers, H100 includes features like second-generation Multi-Instance GPU (MIG), NVIDIA NVLink Switch System, and Confidential Computing to securely accelerate workloads across all environments.

Supercharge Large Language Model Inference with H100 NVL

The NVIDIA H100 NVL, featuring NVLink and 188GB of HBM3 memory, optimizes performance for large language models (LLMs) like Llama 2 (70B). With up to 5X better performance than previous-generation A100 systems, H100 NVL maintains low latency in power-constrained environments.
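A quick way to see why 188GB matters for a 70B-parameter model is to estimate the memory taken by the weights alone. The sketch below is a simplified estimate: it counts only parameters (2 bytes each at FP16, 1 byte each at FP8) and ignores the KV cache, activations, and runtime overhead, which also consume memory in practice. The function and constant names are illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


LLAMA2_70B_PARAMS = 70e9    # parameter count from the model name
H100_NVL_MEMORY_GB = 188    # HBM3 capacity quoted above

# Bytes per parameter for two common inference precisions.
for precision, nbytes in [("FP16", 2), ("FP8", 1)]:
    needed = weight_memory_gb(LLAMA2_70B_PARAMS, nbytes)
    headroom = H100_NVL_MEMORY_GB - needed
    print(f"{precision}: {needed:.0f} GB of weights, {headroom:.0f} GB left over")
```

By this estimate the FP16 weights need about 140 GB, which fits within 188GB but would not fit on a single 80GB card without splitting the model or reducing precision.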

| Condition | Item Condition: Brand New |
|---|---|
| MFG Number | 900-21010-6200-030 |
| Price | $29,499.00 |
| Product Card Description | 80GB GPU Memory, 756 TFLOPS TF32, 2TB/s Bandwidth, Configurable TDP Up to 350W for Ultimate Performance |
| Order Processing Guidelines | Inquiry First: Please reach out to our team to discuss your requirements before placing an order. |
| Special Price | $18,500.00 |
| Special Price From Date | Jul 31, 2024 |