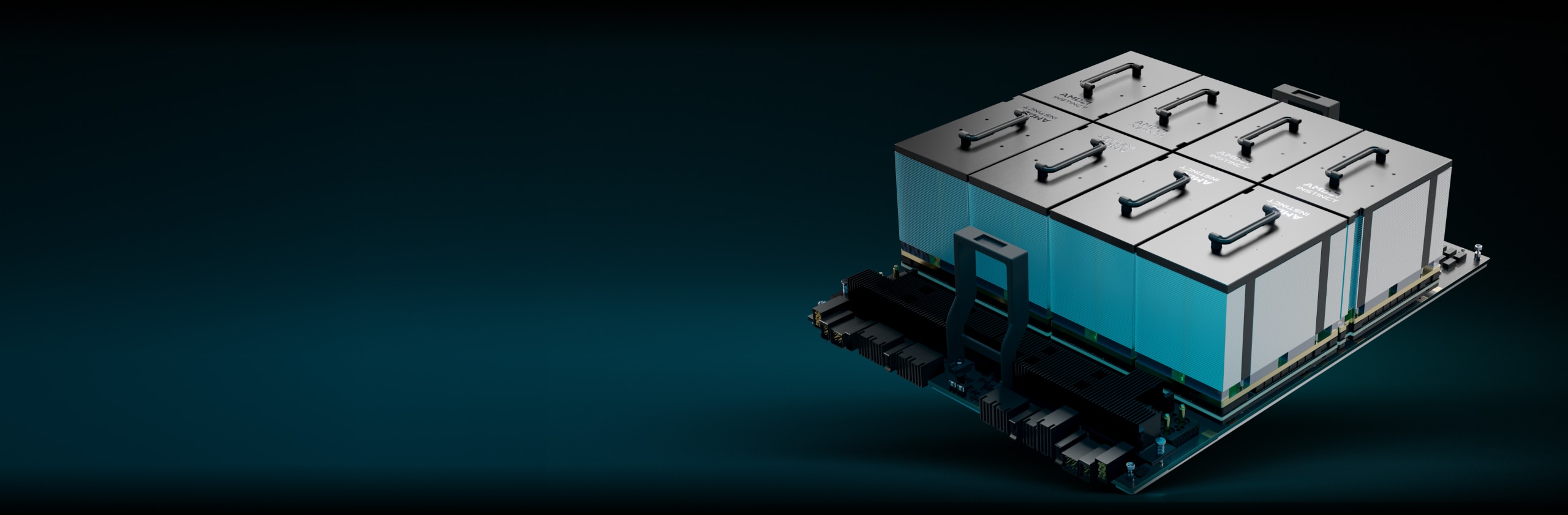

AMD Instinct™ MI300X Platform

- Buy 100 for $25,200.00 each and save 10%

The AMD Instinct™ MI300X platform is designed to deliver exceptional performance for AI and HPC workloads. AMD Instinct MI300 Series accelerators are built on the AMD CDNA™ 3 architecture, which offers Matrix Core Technologies and supports a broad range of precisions, from the highly efficient INT8 and FP8 (including sparsity support for AI) to the most demanding FP64 for HPC.